Artificial intelligence is increasingly used to diagnose illnesses, and ChatGPT is one of the most prominent AI models applied to the task. A recent study published in the journal iScience evaluated how accurately ChatGPT diagnoses diseases and provides medical information. Led by research fellow Ahmed Abdeen Hamed of Binghamton University, the study tested ChatGPT’s ability to identify disease terms, drug names, genetic information, and symptoms.

Surprisingly, ChatGPT identified disease terms, drug names, and genetic information with high accuracy, with rates ranging from 88% to 98%. It scored lower on symptoms, where accuracy ranged from 49% to 61%. One possible reason for the gap is that users tend to describe symptoms in informal language, whereas the medical literature uses formal terminology.

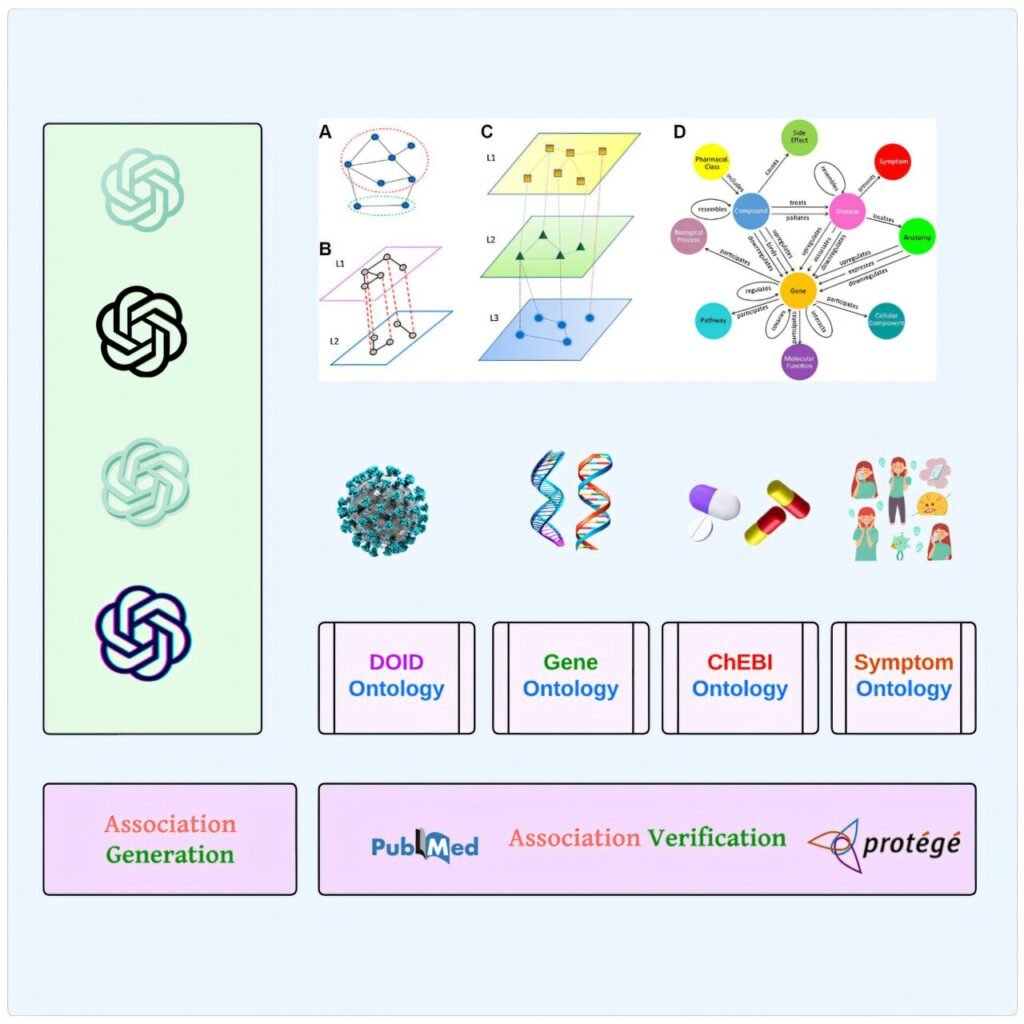

One particularly concerning finding was ChatGPT’s tendency to “hallucinate” when asked for specific genetic information, such as accession numbers from the GenBank database. Hamed highlighted this as a significant flaw in the AI model, suggesting that incorporating biomedical ontologies could improve accuracy and eliminate such errors.
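To make the idea of verifying generated identifiers concrete, here is a minimal Python sketch. It is not the method used in the study. It assumes the commonly documented GenBank nucleotide accession formats (one letter plus five digits, or two letters plus six or eight digits, with an optional version suffix), and the names `looks_like_accession`, `verify_accessions`, and the `trusted` set are hypothetical stand-ins for a lookup against a curated source such as an ontology or the database itself.

```python
import re

# Assumed GenBank nucleotide accession shapes: 1 letter + 5 digits,
# 2 letters + 6 digits, or 2 letters + 8 digits, with an optional ".N" version.
ACCESSION_PATTERN = re.compile(r"(?:[A-Z]\d{5}|[A-Z]{2}\d{6}|[A-Z]{2}\d{8})(?:\.\d+)?")


def looks_like_accession(text: str) -> bool:
    """Return True if the text has the shape of a nucleotide accession number."""
    return ACCESSION_PATTERN.fullmatch(text.strip().upper()) is not None


def verify_accessions(generated, trusted):
    """Sort model-generated identifiers into three buckets.

    `trusted` is a hypothetical set of identifiers known to exist,
    for example one exported from a curated database.
    """
    result = {"verified": [], "unverified": [], "malformed": []}
    for item in generated:
        candidate = item.strip().upper()
        if not looks_like_accession(candidate):
            result["malformed"].append(item)
        elif candidate.split(".")[0] in trusted:
            result["verified"].append(item)
        else:
            # Well-formed but absent from the trusted set: a possible hallucination.
            result["unverified"].append(item)
    return result


if __name__ == "__main__":
    trusted = {"U49845"}  # illustrative only, not a real ontology export
    print(verify_accessions(["U49845", "ZZ999999", "gene-123"], trusted))
```

A format check alone cannot confirm that a record exists, which is why the sketch separates well-formed but unknown identifiers from verified ones.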

Despite these shortcomings, Hamed remains optimistic about the potential of large language models (LLMs) like ChatGPT in the medical field. By addressing these knowledge gaps and hallucination issues, data scientists can fine-tune these models to make them more reliable and accurate for diagnosing illnesses.

Hamed’s research underscores the importance of verifying the information generated by AI models like ChatGPT, especially when it comes to medical diagnoses. By identifying and rectifying these flaws, researchers can enhance the performance of AI models and ensure that they provide trustworthy and accurate information to users.

For more information, see the original publication in iScience, “From knowledge generation to knowledge verification: examining the biomedical generative capabilities of ChatGPT” (DOI: 10.1016/j.isci.2025.112492). The research was conducted in collaboration with AGH University of Krakow, Poland; Howard University; and the University of Vermont.

Overall, ChatGPT shows promise in diagnosing diseases and providing medical information, but its knowledge gaps and hallucinations need to be addressed before it can be considered accurate and reliable in healthcare.